PySpark Delta Transactions in Microsoft Fabric Ep. 3

Explore PySpark in Microsoft Fabric: Master Delta Transactions & Optimize Tables with Pragmatic Works!

Key insights

- Learn how to manage Delta transactions and table maintenance with PySpark in Microsoft Fabric.

- Understand what Delta Lake adds on top of plain data files (ACID transactions, a transaction log, and time travel) and how to use those capabilities to manage data more reliably.

- Explore Delta Lake's transaction isolation levels and how they keep data consistent when several operations run at the same time.

- Discover maintenance techniques such as file compaction (OPTIMIZE) and vacuuming (VACUUM) that keep Delta tables fast and compact.

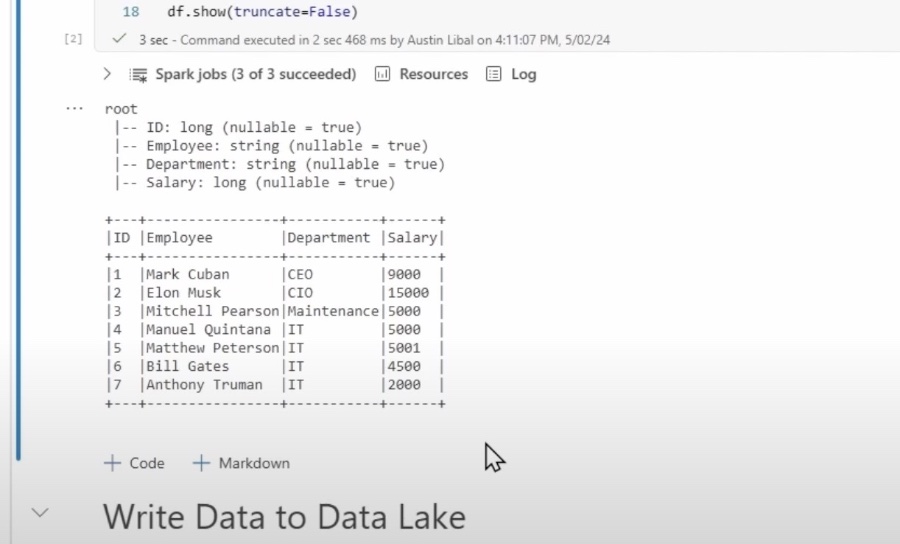

- Follow a practical walkthrough: setting up the environment, then creating, modifying, and optimizing Delta tables with PySpark.
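The create-and-modify steps from the walkthrough can be sketched as follows. This is a minimal illustration, not the video's code: it assumes a Fabric notebook (or any Spark session with Delta Lake enabled) where `spark` is available, and the helper names `merge_condition`, `create_table`, and `upsert` are hypothetical. It writes a DataFrame as a Delta table, then modifies it with a MERGE (upsert) through the `delta.tables.DeltaTable` API.

```python
from typing import Sequence


def merge_condition(keys: Sequence[str], target: str = "t", source: str = "s") -> str:
    """Build a MERGE join condition such as 't.id = s.id AND t.region = s.region'."""
    if not keys:
        raise ValueError("at least one key column is required")
    return " AND ".join(f"{target}.{k} = {source}.{k}" for k in keys)


def create_table(df, table_name: str) -> None:
    """Write a DataFrame as a managed Delta table, replacing any existing data."""
    df.write.format("delta").mode("overwrite").saveAsTable(table_name)


def upsert(spark, updates_df, table_name: str, keys: Sequence[str]) -> None:
    """Update matching rows and insert new ones as a single Delta transaction."""
    # Imported here so merge_condition can be used without delta-spark installed.
    from delta.tables import DeltaTable

    (
        DeltaTable.forName(spark, table_name)
        .alias("t")
        .merge(updates_df.alias("s"), merge_condition(keys))
        .whenMatchedUpdateAll()     # matched keys: overwrite all columns
        .whenNotMatchedInsertAll()  # new keys: insert the source row
        .execute()
    )


# Example usage in a notebook (hypothetical table "sales_demo"):
# sales = spark.createDataFrame([(1, "EU", 100.0)], ["id", "region", "amount"])
# create_table(sales, "sales_demo")
# changes = spark.createDataFrame([(1, "EU", 120.0), (2, "US", 80.0)],
#                                 ["id", "region", "amount"])
# upsert(spark, changes, "sales_demo", ["id", "region"])
```

Because the MERGE commits as one transaction, readers see either the table before the upsert or after it, never a half-applied mix.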

Exploring PySpark and Delta Lake in Microsoft Fabric

PySpark, the Python API for Apache Spark, is the main tool for processing large datasets in Microsoft Fabric notebooks. Delta Lake, the standard table format for Fabric lakehouses, adds ACID transactions on top of Parquet files: every write is recorded as a commit in the table's transaction log (the _delta_log folder), so readers always see a consistent snapshot and earlier versions of the table stay queryable. Delta Lake also provides maintenance commands such as OPTIMIZE and VACUUM. Together, PySpark and Delta Lake make table maintenance, optimization, and data consistency much easier to handle than managing raw files directly, which helps organizations turn their data into reliable insights and decisions.
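Delta's transaction management is visible through the table's commit history, and each commit can be queried again with time travel. The sketch below is illustrative only: `time_travel_query` and `show_history` are hypothetical helpers, it assumes a Spark session with Delta Lake enabled, and the `VERSION AS OF` / `TIMESTAMP AS OF` SQL syntax assumes a reasonably recent Delta Lake release.

```python
from typing import Optional


def time_travel_query(table_name: str, version: Optional[int] = None,
                      timestamp: Optional[str] = None) -> str:
    """Return a Delta time-travel SELECT for exactly one of version or timestamp."""
    if (version is None) == (timestamp is None):
        raise ValueError("pass exactly one of version or timestamp")
    if version is not None:
        if version < 0:
            raise ValueError("version must be non-negative")
        return f"SELECT * FROM {table_name} VERSION AS OF {version}"
    return f"SELECT * FROM {table_name} TIMESTAMP AS OF '{timestamp}'"


def show_history(spark, table_name: str, limit: int = 10):
    """Return the most recent commits recorded in the table's transaction log."""
    from delta.tables import DeltaTable

    return (
        DeltaTable.forName(spark, table_name)
        .history(limit)
        .select("version", "timestamp", "operation")
    )


# Example usage (hypothetical table "sales_demo"):
# show_history(spark, "sales_demo").show()
# spark.sql(time_travel_query("sales_demo", version=0)).show()
```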

Overall, using PySpark with Delta Lake on a platform like Microsoft Fabric marks a significant advance in data processing and management. As data keeps growing in volume and importance, these technologies are likely to play a central role in data-driven organizations.

The video presents Delta Lake as a tool for handling large volumes of data and explains the transaction isolation levels it offers, showing why they matter for keeping data consistent when multiple operations run concurrently.
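Under the hood, Delta Lake uses optimistic concurrency control: each writer tries to commit a new entry to the transaction log, and if a concurrent commit conflicts with data the transaction read, the later commit fails with an exception such as `ConcurrentAppendException`. Which conflicts are raised depends on the isolation level and the operations involved. A common pattern is to retry the operation against the latest table state. This is a minimal sketch, assuming the exception classes exposed by the `delta.exceptions` module in `delta-spark`; `retry_on_conflict` and `update_with_retry` are hypothetical helpers, not part of any library.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_on_conflict(action: Callable[[], T],
                      conflict_types: Tuple[Type[BaseException], ...],
                      max_attempts: int = 3,
                      base_delay: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Re-run `action` when it fails with one of `conflict_types`."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except conflict_types:
            if attempt == max_attempts:
                raise  # give up and surface the last conflict
            # Exponential backoff: base_delay, 2x, 4x, ... between attempts.
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")


def update_with_retry(spark, table_name: str, condition: str, assignments: dict) -> None:
    """Run a Delta UPDATE, retrying if a concurrent commit conflicts with it."""
    from delta.exceptions import (ConcurrentAppendException,
                                  ConcurrentDeleteReadException)
    from delta.tables import DeltaTable

    retry_on_conflict(
        # Look the table up inside the lambda so each attempt starts a fresh
        # transaction against the latest committed version.
        lambda: DeltaTable.forName(spark, table_name).update(
            condition=condition, set=assignments),
        (ConcurrentAppendException, ConcurrentDeleteReadException),
    )


# Example usage (hypothetical table and columns):
# update_with_retry(spark, "sales_demo", "region = 'EU'", {"amount": "amount * 1.1"})
```

Retrying is only safe when the operation is idempotent with respect to the new snapshot, as a conditional UPDATE or MERGE usually is.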

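Viewers are then guided through two maintenance practices: file compaction and vacuuming. Compaction (OPTIMIZE) rewrites many small data files into fewer, larger ones so that queries read faster, and vacuuming (VACUUM) deletes data files that the transaction log no longer references once they are older than the retention period, reclaiming storage. Together they keep Delta tables performing efficiently.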
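Both maintenance commands can be run from a PySpark notebook through Spark SQL. The sketch below is an illustration under stated assumptions: `maintenance_statements` and `run_maintenance` are hypothetical helpers, the optional Z-ordering columns are an example choice, and the 168-hour floor mirrors Delta's default 7-day retention (`delta.deletedFileRetentionDuration`), below which Delta refuses to VACUUM unless its safety check is disabled.

```python
from typing import List, Optional, Sequence

DEFAULT_RETENTION_HOURS = 168  # Delta's default 7-day retention window


def maintenance_statements(table_name: str,
                           zorder_by: Optional[Sequence[str]] = None,
                           retain_hours: int = DEFAULT_RETENTION_HOURS) -> List[str]:
    """Build OPTIMIZE (compaction) and VACUUM statements for one Delta table."""
    if retain_hours < DEFAULT_RETENTION_HOURS:
        # Shorter retention can delete files that running readers or
        # time-travel queries still need, so reject it here.
        raise ValueError(f"retain_hours must be >= {DEFAULT_RETENTION_HOURS}")
    optimize = f"OPTIMIZE {table_name}"
    if zorder_by:
        optimize += f" ZORDER BY ({', '.join(zorder_by)})"
    vacuum = f"VACUUM {table_name} RETAIN {retain_hours} HOURS"
    return [optimize, vacuum]


def run_maintenance(spark, table_name: str, **kwargs) -> None:
    """Compact small files first, then remove files no longer in the log."""
    for statement in maintenance_statements(table_name, **kwargs):
        spark.sql(statement)


# Example usage (hypothetical table "sales_demo"):
# run_maintenance(spark, "sales_demo", zorder_by=["region"])
```

Compacting before vacuuming means the small files replaced by OPTIMIZE become unreferenced and are removed by a later VACUUM once they age past the retention window.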

People also ask

How do you read Delta data in PySpark?
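In PySpark, a Delta table is read like any other Spark source: by name through the catalog with `spark.read.table(...)`, or by storage location with `spark.read.format("delta").load(...)`. The sketch below wraps both options; `read_delta` is a hypothetical helper, and the path you would pass (for example an ABFS path into OneLake) depends on your workspace and is an assumption here.

```python
from typing import Optional


def read_delta(spark, path: Optional[str] = None, table_name: Optional[str] = None):
    """Load a Delta table by metastore table name or by storage path."""
    if (path is None) == (table_name is None):
        raise ValueError("pass exactly one of path or table_name")
    if table_name is not None:
        return spark.read.table(table_name)
    return spark.read.format("delta").load(path)


# Example usage (hypothetical names):
# df = read_delta(spark, table_name="sales_demo")
# df = read_delta(spark, path="<path to the Delta table folder>")
```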

How do you install Delta Lake for PySpark?
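Outside Fabric, one documented route is to `pip install delta-spark` and enable Delta's SQL extension and catalog when building the Spark session. This is a minimal sketch, assuming a local Python environment where the installed `pyspark` and `delta-spark` versions are compatible; `DELTA_SPARK_CONFS` and `delta_session` are hypothetical names. Fabric notebooks do not need this step, because their `spark` session already has Delta enabled.

```python
# The two settings Delta Lake needs on a Spark session.
DELTA_SPARK_CONFS = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
}


def delta_session(app_name: str = "delta-local"):
    """Create a local SparkSession with Delta Lake enabled (not needed in Fabric)."""
    from delta import configure_spark_with_delta_pip
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in DELTA_SPARK_CONFS.items():
        builder = builder.config(key, value)
    # Adds the Delta JARs that match the pip-installed delta-spark version.
    return configure_spark_with_delta_pip(builder).getOrCreate()


# Example usage:
# spark = delta_session()
```

The same two settings can be passed to the `pyspark` shell with `--conf`, together with `--packages` for the Delta artifact that matches your Spark version.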

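Outside Fabric, install the delta-spark package with pip, or start the PySpark shell with the Delta Lake package plus its SQL extension and catalog settings. In Microsoft Fabric, Delta Lake is already part of the Spark runtime, so no installation is needed.

What is Microsoft Fabric?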

Microsoft Fabric is an end-to-end analytics and data platform built for enterprise needs. It brings together data movement, ingestion, processing, transformation, and real-time event handling, along with tools for building analytical reports.

What is DLT in Databricks?

Delta Live Tables (DLT) is part of the Databricks Data Intelligence Platform. It is a declarative ETL framework that helps data teams build and run both streaming and batch ETL pipelines more simply and cost-effectively.
