- All of Microsoft

Introduction to PySpark in Microsoft Fabric 2024 Update

Unlock big data potential with PySpark in Microsoft Fabric - Your guide to scalable analytics and seamless integration!

Key insights

- PySpark is a Python interface for Apache Spark, designed for big data analytics.

- Microsoft Fabric, formerly Azure HDInsight, offers cloud-based big data analytics with managed Spark clusters.

- Using PySpark in Microsoft Fabric enhances scalability, ease of use, integration with Azure services, and optimized performance.

- Key concepts include DataFrames, RDDs (Resilient Distributed Datasets), Spark SQL, and Microsoft Fabric Notebooks for data analysis.

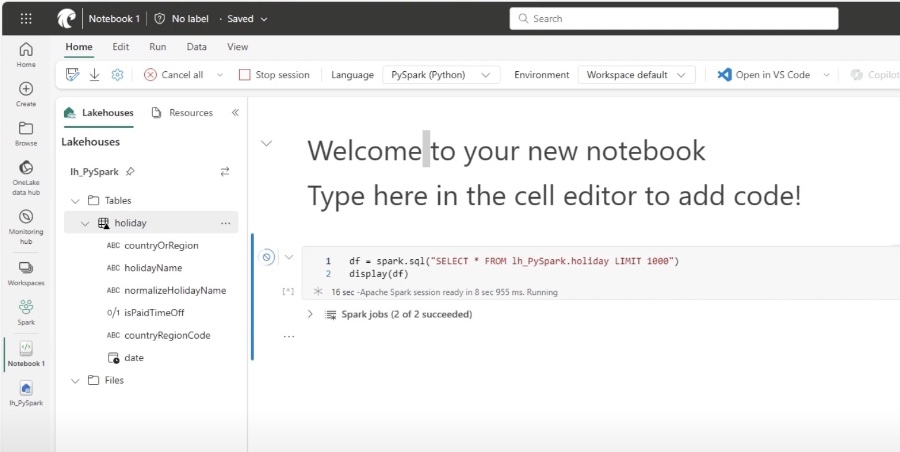

- To get started with PySpark in Fabric, create a workspace, a PySpark notebook, import libraries, create a SparkSession, load data, and then explore/manipulate it.

Understanding PySpark and Its Significance in Big Data Analytics

PySpark is an essential tool in the arsenal of data scientists and analysts working with large scale data processing. It effectively combines the power of Spark's distributed computing engine with the simplicity and versatility of Python, making it a popular choice for big data analytics. The integration of PySpark with Microsoft Fabric substantially simplifies the complexities involved in deploying and managing big data applications. It leverages Azure’s powerful cloud infrastructure to provide optimized performance and scalability, helping businesses efficiently process massive datasets.

With Microsoft Fabric, users gain access to managed Spark clusters, significantly reducing the overhead associated with setting up and configuring Spark environments. This integration supports a broad range of data analysis tasks from exploratory data analysis to complex data transformations and aggregations, using familiar constructs like DataFrames and SQL queries. More importantly, PySpark’s compatibility with other Azure services opens up endless possibilities for advanced analytics and machine learning workflows, making it an invaluable tool for organizations aiming to derive actionable insights from their data. Whether starting your big data journey or looking to streamline existing workflows, PySpark in Microsoft Fabric offers a powerful, flexible, and efficient solution.

In Episode 1, "Pragmatic Works" provides an introduction to PySpark in Microsoft Fabric, shedding light on the fundamentals of the renowned big data processing framework, Spark, and how to effectively utilize PySpark for data manipulation and analysis. This episode serves as a beginner's guide, emphasizing the seamless integration of PySpark, a Python interface for Spark, with the versatile data management platform, formerly known as Azure HDInsight. The focus is on delivering a clear understanding of how PySpark can be an invaluable tool in managing and analyzing vast datasets.

PySpark represents a bridge between the powerful Spark engine and the flexible Python programming language, enabling the analysis and processing of large-scale data analytics. Microsoft Fabric simplifies the deployment and scaling of Spark applications, eliminating the complexities of infrastructure management. The tutorial highlights the advantages of using PySpark within Microsoft Fabric, including scalability, ease of use, comprehensive integration with Azure services, and optimized performance for Azure's infrastructure. This ensures that viewers can tackle demanding data challenges efficiently.

The core concepts covered include DataFrames and RDDs (Resilient Distributed Datasets), enabling structured data manipulation, and Spark SQL for SQL-like data querying. Microsoft Fabric Notebooks are introduced as dynamic tools for combining Python code, visualizations, and text in an interactive web interface, facilitating exploratory data analysis and teamwork. Practical steps for getting started with PySpark in Microsoft Fabric, such as setting up a workspace, creating a PySpark notebook, and loading data for analysis, are clearly laid out with code examples for immediate application.

The instructional content shifts to a pragmatic approach with an example of analyzing sales data, showcasing the process from loading data to calculating and visualizing total revenue per product category. This demonstrates PySpark's powerful capability for data exploration and manipulation in real-world scenarios. The post concludes by inviting viewers to explore further specifics on PySpark, advanced use cases, or how it integrates with other components of the platform, emphasizing the series' commitment to providing comprehensive insights into big data analytics with PySpark in Microsoft Fabric.

Exploring PySpark in Big Data Analytics

PySpark has emerged as a powerful tool for big data analytics, particularly when used within Microsoft Fabric. This combination provides a robust environment for processing large datasets, leveraging the strengths of Spark's processing capabilities and the versatility of Python. PySpark in Microsoft Fabric enables businesses to scale their data processing capabilities, manage complex datasets efficiently, and integrate seamlessly with various Azure services for an enhanced analytics ecosystem.

The use of DataFrames and RDDs in PySpark facilitates sophisticated data manipulation and analysis processes, supporting businesses in deriving meaningful insights from their data. Spark SQL extends these capabilities by allowing users to query data in a manner similar to traditional SQL, making the analytics process more familiar and accessible. Through Microsoft Fabric Notebooks, users can perform exploratory data analysis, share insights, and collaborate effectively, making it a go-to tool for data scientists and analysts.

Setting up and starting with PySpark in Microsoft Fabric is straightforward, encouraging users to engage with the platform and explore its multitude of features. Importing libraries, creating Spark Sessions, and loading data are fundamental steps that pave the way for advanced data exploration and manipulation tasks. Practical examples, such as analyzing sales data, illustrate the applicability of PySpark in real-world scenarios, showcasing its potential to drive business decisions through data-driven insights.

Ultimately, PySpark within Microsoft Fabric presents a unique opportunity for organizations to harness the power of big data analytics. It offers a scalable, easy-to-use platform that integrates with a wide range of Azure services, leading to optimized performance and enhanced analytical outcomes. With ongoing support and extensive documentation available, users can continually expand their knowledge and leverage PySpark's full capabilities to meet their data analysis needs.

People also ask

Questions and Answers about Microsoft 365

"What is Spark in Microsoft fabric?"Microsoft Fabric prominently features Apache Spark as a pivotal technology for extensive data analytics. By supporting Spark clusters within Microsoft Fabric framework, it empowers users with the capability to perform significant data analysis and processing in a Lakehouse environment, managing data at an extensive scale. "What is the introduction of PySpark programming?"

Delving into PySpark reveals its essence as a collaborative effort between Apache Spark and Python specifically aimed at handling Big Data challenges. Originating from UC Berkeley's AMP Lab, Apache Spark is a robust, open-source, cluster-computing framework designed for expansive data management and is incorporated with the versatile and high-level Python programming language for an integrated Big Data solution. "Which language can be used to write code for Spark in Microsoft fabric?"

Within the versatile environment of Fabric notebooks, users are presented with the flexibility to employ one of four languages specifically tailored for Apache Spark development: PySpark (leveraging Python), Spark (utilizing Scala), and Spark SQL, catering to various programming preferences and requirements in Spark-based applications. "What is the purpose of PySpark?"

PySpark stands as the Python API for Apache Spark, crafted to bridge Apache Spark's scalable data processing capabilities with Python’s simplicity and accessibility. It equips users with the power to undertake real-time, vast-scale data operations in a distributed setting, utilizing Python to facilitate extensive data handling and analysis processes.

Keywords

PySpark, Microsoft Fabric, PySpark Tutorial, Microsoft Synapse, Spark in Azure, Big Data Analytics, Azure Databricks, PySpark Introduction