Boost Power BI Efficiency with New Direct Lake Model

Discover the Future of Data Analysis: Master Power BI's Direct Lake for High-Speed Insights

Key insights

- Optimize Power BI models with the new Direct Lake semantic model mode, which handles large data volumes efficiently without duplicating data.

- Direct Lake mode combines the benefits of DirectQuery and import modes: changes at the data source can be reflected immediately, and analysis performance is similar to import mode.

- Direct Lake supports row-level security (RLS) and object-level security (OLS), so users see only the data they are permitted to see.

- Setting up Direct Lake requires provisioning a lakehouse (or a warehouse), and Direct Lake is supported only on Power BI Premium (P) and Microsoft Fabric (F) SKUs.

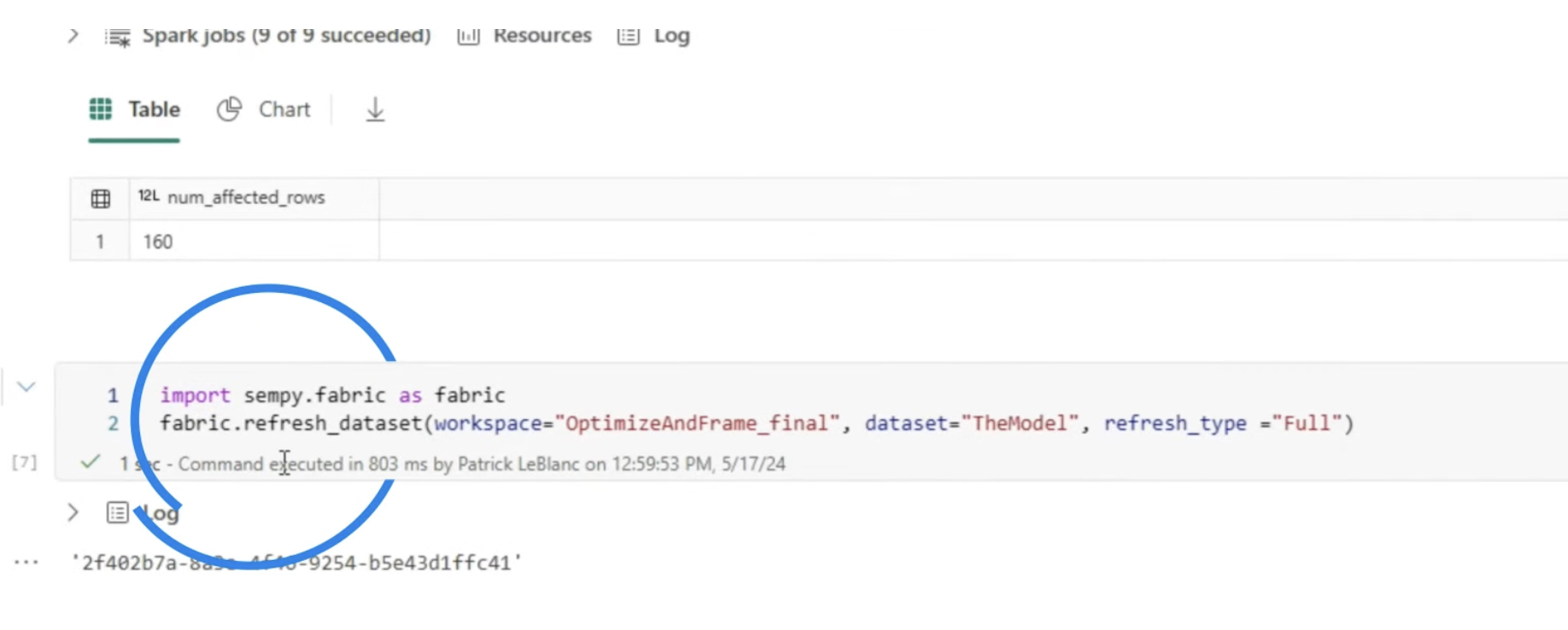

- Advanced interactions with Direct Lake semantic models via the XMLA endpoint support customizations, scripting, and automation tasks which can significantly enhance management and operational flexibility.
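
As an example of that kind of scripting, operations sent through the XMLA endpoint are commonly written in TMSL (Tabular Model Scripting Language), a JSON command format. The Python sketch below only builds a TMSL `refresh` command for a whole model; sending it requires an XMLA-capable client, such as SQL Server Management Studio, which is not shown. The model name is a hypothetical placeholder, and we assume, rather than show, that refreshing a Direct Lake model reframes it instead of copying data.

```python
import json

# Refresh types accepted by the TMSL refresh command.
TMSL_REFRESH_TYPES = {
    "full", "clearValues", "calculate", "dataOnly", "automatic", "add", "defragment",
}


def build_tmsl_refresh(database: str, refresh_type: str = "full") -> str:
    """Return a TMSL refresh command, as JSON text, for one whole model.

    Sending the command over the XMLA endpoint needs a separate client.
    """
    if refresh_type not in TMSL_REFRESH_TYPES:
        raise ValueError(f"unsupported refresh type: {refresh_type}")
    command = {
        "refresh": {
            "type": refresh_type,
            # "database" names the semantic model as seen through XMLA.
            "objects": [{"database": database}],
        }
    }
    return json.dumps(command, indent=2)


if __name__ == "__main__":
    # "Sales Direct Lake" is a hypothetical model name.
    print(build_tmsl_refresh("Sales Direct Lake"))
```

Generating the JSON in code rather than by hand makes it easy to loop over several models in a scheduled automation job.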

Exploring Direct Lake in Power BI

Want to know how to make sure you see the latest data when using Direct Lake in your Power BI semantic models? Patrick shows you the two options you have and explains the concept of reframing.

Direct Lake mode is a semantic model capability for analyzing very large data volumes in Power BI. It loads Parquet-formatted files directly from a data lake, without querying a lakehouse or warehouse endpoint and without importing or duplicating data into a Power BI model. In effect, Direct Lake is a fast path that loads data from the lake straight into the Power BI engine, ready for analysis.

In DirectQuery mode, the Power BI engine queries the data at the source. This can be slow, but it avoids copying the data as import mode does, and any changes at the data source are immediately reflected in the query results. Import mode, on the other hand, can perform better because the data is cached and optimized for DAX and MDX report queries, with no need to translate those queries into SQL or another query language and pass them to the data source.

However, with import mode the Power BI engine must first copy any new data into the model during refresh, so changes at the source are only picked up with the next model refresh. Direct Lake mode eliminates this import step by loading the data directly from OneLake. Unlike DirectQuery, it doesn't translate DAX or MDX into other query languages or execute queries on other database systems, which yields performance similar to import mode.

Because there's no explicit import process, changes at the data source can be picked up as they occur. Direct Lake therefore combines the advantages of DirectQuery and import modes while avoiding their main disadvantages, which makes it a strong choice for very large models and for models whose source data is updated frequently. Direct Lake also supports row-level security and object-level security, so users see only the data they have permission to see.
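
To make the difference in data freshness concrete, here is a toy Python model, not Power BI code. `DeltaSource` stands in for a lakehouse table whose commits create new versions, and each model class reports a sum over the rows it can see. The `auto_update` flag is our assumption of how the two options for staying current behave: either the model reframes automatically when the source changes, or it keeps its current frame until a refresh calls `reframe()`. Reframing here only moves a version pointer and copies no rows, which is the key contrast with `ImportModel.refresh()`. The sketch ignores performance, caching, and how Direct Lake pages column data into memory.

```python
from dataclasses import dataclass, field


@dataclass
class DeltaSource:
    """Toy lakehouse table: every commit appends rows and creates a new version."""
    versions: list = field(default_factory=lambda: [[]])

    def commit(self, rows):
        self.versions.append(self.versions[-1] + list(rows))

    @property
    def latest(self) -> int:
        return len(self.versions) - 1


class ImportModel:
    """Copies rows into the model; source changes appear only after refresh()."""

    def __init__(self, source: DeltaSource):
        self.source = source
        self.refresh()

    def refresh(self):
        self.cache = list(self.source.versions[self.source.latest])  # duplicated data

    def total(self) -> int:
        return sum(self.cache)


class DirectQueryModel:
    """Sends every query to the source, so it always sees the latest version."""

    def __init__(self, source: DeltaSource):
        self.source = source

    def total(self) -> int:
        return sum(self.source.versions[self.source.latest])


class DirectLakeModel:
    """Reads the source in place at a framed version; keeps no copy of the rows."""

    def __init__(self, source: DeltaSource, auto_update: bool = True):
        self.source = source
        self.auto_update = auto_update
        self.framed_version = source.latest

    def reframe(self):
        self.framed_version = self.source.latest  # moves a pointer, copies nothing

    def total(self) -> int:
        if self.auto_update:
            self.reframe()  # automatic updates: follow the source as it changes
        return sum(self.source.versions[self.framed_version])


if __name__ == "__main__":
    src = DeltaSource()
    src.commit([10, 20])
    models = [ImportModel(src), DirectQueryModel(src),
              DirectLakeModel(src), DirectLakeModel(src, auto_update=False)]
    src.commit([5])
    print([m.total() for m in models])  # [30, 35, 35, 30]
    models[3].reframe()
    print(models[3].total())  # 35
```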

Direct Lake is supported only on Power BI Premium (P) SKUs and Microsoft Fabric (F) SKUs. New customers can use Direct Lake on Fabric (F) SKUs only. Existing customers can continue to use Direct Lake with Premium (P) SKUs, but moving to a Fabric capacity SKU is recommended. See the licensing announcement for more information about Power BI Premium licensing.
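
The SKU rules above can be written as a small check. This is a sketch, assuming capacity SKU names of the form `F64` or `P1`; it looks only at the SKU family letter, so it doesn't model per-SKU limits or trial capacities, and anything else, such as an `A` SKU or the Premium Per User license, is treated as unsupported.

```python
import re


def direct_lake_supported(sku: str, existing_customer: bool) -> bool:
    """Apply the Direct Lake SKU rules to a capacity SKU name such as 'F64' or 'P1'."""
    match = re.fullmatch(r"([FP])(\d+)", sku.strip().upper())
    if match is None:
        return False  # e.g. an A SKU, or "PPU", which is a license, not a P SKU
    if match.group(1) == "F":
        return True  # Fabric capacity: supported for new and existing customers
    return existing_customer  # Premium capacity: existing customers only


if __name__ == "__main__":
    for sku in ("F64", "P1", "PPU", "A4"):
        print(sku, direct_lake_supported(sku, existing_customer=False))
```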

Before using Direct Lake, you must provision a lakehouse (or a warehouse) with one or more Delta tables in a workspace hosted on a supported Microsoft Fabric capacity. The lakehouse is required because it provides the storage location for your Parquet-formatted files in OneLake. It also provides an access point for launching web modeling to create a Direct Lake model.
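
A Delta table is a folder of Parquet data files plus a transaction log in `_delta_log`, where each commit is a JSON file of newline-delimited actions. The set of Parquet files that make up the table at a given version comes from replaying the `add` and `remove` actions in order, and as we understand it, this pinned file set is what a Direct Lake frame refers to. The sketch below is simplified: it takes commit contents as in-memory strings, reads only the `path` field, and ignores checkpoints, deletion vectors, and the other fields real actions carry.

```python
import json


def active_parquet_files(commits, version=None):
    """Replay Delta log commits and return the Parquet files live at `version`.

    `commits[i]` holds the newline-delimited JSON actions of commit i, i.e. the
    contents of _delta_log/<i, zero-padded to 20 digits>.json. Without a
    version, the latest commit is used.
    """
    last = len(commits) - 1 if version is None else version
    live = set()
    for text in commits[: last + 1]:
        for line in text.splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])  # file joins the table
            elif "remove" in action:
                live.discard(action["remove"]["path"])  # file leaves the table
            # metaData, protocol, commitInfo and other actions are ignored here.
    return sorted(live)


if __name__ == "__main__":
    log = [
        '{"add": {"path": "part-000.parquet"}}\n{"add": {"path": "part-001.parquet"}}',
        '{"remove": {"path": "part-000.parquet"}}\n{"add": {"path": "part-002.parquet"}}',
    ]
    print(active_parquet_files(log, version=0))  # ['part-000.parquet', 'part-001.parquet']
    print(active_parquet_files(log))  # ['part-001.parquet', 'part-002.parquet']
```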

Patrick guides you through provisioning a lakehouse, creating a Delta table in it, and creating a basic model for it. When a lakehouse is provisioned, a SQL endpoint for SQL querying and a default semantic model for reporting are also created, and both are updated with any tables added to the lakehouse. Direct Lake mode doesn't query the SQL endpoint when it loads data directly from OneLake, but the endpoint is required when a Direct Lake model must seamlessly fall back to DirectQuery mode.
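
The fallback decision can be sketched as a routing function. In this hypothetical Python model, a query is served from OneLake when the table stays within a set of guardrails and is otherwise sent to the SQL endpoint as a DirectQuery. The `Behavior` values loosely mirror the three options of the model's `DirectLakeBehavior` property (`Automatic`, `DirectLakeOnly`, `DirectQueryOnly`); the guardrail fields and numbers are placeholders, since real limits depend on the capacity SKU. Other fallback triggers, such as security defined at the SQL endpoint, are not modeled.

```python
from dataclasses import dataclass
from enum import Enum


class Behavior(Enum):
    """Loosely mirrors the three DirectLakeBehavior options."""
    AUTOMATIC = "Automatic"
    DIRECT_LAKE_ONLY = "DirectLakeOnly"
    DIRECT_QUERY_ONLY = "DirectQueryOnly"


@dataclass
class TableStats:
    rows: int
    parquet_files: int


@dataclass
class Guardrails:
    """Placeholder limits; real guardrails depend on the capacity SKU."""
    max_rows: int
    max_parquet_files: int


def choose_path(table: TableStats, limits: Guardrails,
                behavior: Behavior = Behavior.AUTOMATIC) -> str:
    """Return 'onelake' (Direct Lake) or 'sql_endpoint' (DirectQuery fallback)."""
    if behavior is Behavior.DIRECT_QUERY_ONLY:
        return "sql_endpoint"
    within_limits = (table.rows <= limits.max_rows
                     and table.parquet_files <= limits.max_parquet_files)
    if within_limits:
        return "onelake"  # load column data straight from the Delta files
    if behavior is Behavior.DIRECT_LAKE_ONLY:
        raise RuntimeError("table exceeds guardrails and fallback is disabled")
    return "sql_endpoint"  # fall back to DirectQuery through the SQL endpoint


if __name__ == "__main__":
    limits = Guardrails(max_rows=1_000, max_parquet_files=10)  # toy numbers
    print(choose_path(TableStats(rows=500, parquet_files=3), limits))    # onelake
    print(choose_path(TableStats(rows=5_000, parquet_files=3), limits))  # sql_endpoint
```

The `DIRECT_LAKE_ONLY` branch shows why the SQL endpoint matters: without a fallback path, a query that can't be served from OneLake has nowhere to go.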

Exploring the Direct Lake Semantic Models in Power BI

The introduction of Direct Lake in Power BI has changed how businesses can work with massive datasets, without the performance setbacks usually associated with traditional storage modes. It allows data to be analyzed directly from the source as it changes, largely removing the need to import or duplicate data. It also honors each user's access level through row-level and object-level security, so data is handled securely and each user interacts only with the data they are permitted to see.

Designed for large-scale business environments, Direct Lake suits organizations that need their reports to reflect source changes quickly, as well as those analyzing very large or complex data structures. By shifting toward more immediate and efficient data processing, it gives businesses a solid platform for near-real-time analytics, helping them make faster, better-informed decisions and maintain a competitive edge.

People also ask

"What are the limitations of Direct Lake mode in Power BI?"

Direct Lake mode in Power BI has specific constraints: the tables in a semantic model can be sourced from only a single lakehouse or warehouse, and Direct Lake tables can't be combined with tables in other storage modes, such as Import, DirectQuery, or Dual, within the same semantic model. Composite models are also not currently supported in Direct Lake mode.
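
The two restrictions in this answer can be checked mechanically before publishing. The sketch below assumes a hypothetical `Table` record with a storage mode and a source name; it isn't tied to any Power BI API, and it encodes only the rules as stated here.

```python
from dataclasses import dataclass


@dataclass
class Table:
    name: str
    storage_mode: str  # "DirectLake", "Import", "DirectQuery" or "Dual"
    source: str        # the lakehouse or warehouse the table comes from


def check_direct_lake_model(tables):
    """Return a list of rule violations; an empty list means the model passes."""
    problems = []
    if any(t.storage_mode == "DirectLake" for t in tables):
        # Rule 1: no mixing Direct Lake with other storage modes.
        other = sorted({t.name for t in tables if t.storage_mode != "DirectLake"})
        if other:
            problems.append(f"non-Direct Lake tables in a Direct Lake model: {other}")
        # Rule 2: every Direct Lake table comes from the same source.
        sources = sorted({t.source for t in tables if t.storage_mode == "DirectLake"})
        if len(sources) > 1:
            problems.append(f"tables come from more than one source: {sources}")
    return problems


if __name__ == "__main__":
    model = [Table("Sales", "DirectLake", "SalesLakehouse"),
             Table("Budget", "Import", "BudgetWorkbook")]
    print(check_direct_lake_model(model))
```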

"How to improve the performance of direct query in Power BI?"

To improve DirectQuery performance in Power BI, one effective strategy is to increase the Maximum connections per data source setting. This allows more queries to be sent to the data source concurrently, which helps most with complex reports that contain many visuals or that many users access at the same time, provided the data source can handle the extra concurrent load.
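
Why the setting matters can be seen in a toy simulation: each visual's query must hold one of a limited number of connections, modeled here with an `asyncio.Semaphore`. The function reports the peak number of concurrent queries, and `estimated_waves` gives the number of back-to-back rounds needed for equal-length queries, ceil(n / c). This models only the queueing effect; the function names are hypothetical, and real gains depend on how much concurrent load the source can absorb.

```python
import asyncio


async def run_visual_queries(n_queries: int, max_connections: int,
                             query_seconds: float = 0.01) -> int:
    """Run n simulated queries through a pool of max_connections connections.

    Returns the peak number of queries that were in flight at the same time.
    """
    pool = asyncio.Semaphore(max_connections)
    in_flight = 0
    peak = 0

    async def query():
        nonlocal in_flight, peak
        async with pool:  # wait here until a connection is free
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(query_seconds)  # stand-in for work at the source
            in_flight -= 1

    await asyncio.gather(*(query() for _ in range(n_queries)))
    return peak


def estimated_waves(n_queries: int, max_connections: int) -> int:
    """Rounds needed when equal-length queries share the pool: ceil(n / c)."""
    return -(-n_queries // max_connections)


if __name__ == "__main__":
    for limit in (4, 10):
        peak = asyncio.run(run_visual_queries(12, limit))
        print(limit, peak, estimated_waves(12, limit))  # 4 4 3, then 10 10 2
```

With 12 visuals, raising the limit from 4 to 10 cuts the work from three rounds to two, which is the effect the setting is meant to exploit.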

"What are the limitations of semantic model in Power BI?"

Semantic models authored in Power BI Desktop are subject to a 10 GB size cap, and this cap still applies when Power BI Premium is used. In addition, on shared (non-Premium) capacity in the Power BI service, scheduled refresh is limited to no more than eight refreshes per day.
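
The refresh limit is easy to check when planning a schedule. This hypothetical helper assumes a limit of eight refreshes per day and spreads refreshes evenly across 24 hours; eight refreshes work out to one every three hours (24 * 60 / 8 = 180 minutes). It doesn't model the scheduling options the Power BI service actually offers, such as time zones or the granularity of selectable times.

```python
from datetime import time


def evenly_spaced(n: int, start_hour: int = 0) -> list:
    """Spread n refreshes evenly over 24 hours, starting at start_hour."""
    step = 24 * 60 // n  # minutes between refreshes
    slots = []
    for i in range(n):
        total = start_hour * 60 + i * step
        slots.append(time((total // 60) % 24, total % 60))
    return slots


def check_refresh_schedule(times: list, max_per_day: int = 8) -> list:
    """Return the sorted schedule, or raise if it breaks the daily limit."""
    unique = sorted(set(times))
    if len(unique) != len(times):
        raise ValueError("the schedule contains duplicate refresh times")
    if len(unique) > max_per_day:
        raise ValueError(f"{len(unique)} refreshes exceed the limit of {max_per_day}")
    return unique


if __name__ == "__main__":
    schedule = check_refresh_schedule(evenly_spaced(8))
    print([t.strftime("%H:%M") for t in schedule])
    # ['00:00', '03:00', '06:00', '09:00', '12:00', '15:00', '18:00', '21:00']
```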

"How do I update a semantic model in Power BI?"

Significant changes made in Power Query or in a datamart aren't automatically synchronized to the semantic model. Refresh the report manually in Power BI Desktop to verify and apply the required changes, then republish the semantic model.

Keywords

Optimize Power BI, Direct Lake Semantic Model, Power BI performance, Semantic modeling in Power BI, Direct Query Power BI, Power BI optimizations, Lakehouse Power BI, Power BI best practices