Landing data with Dataflows Gen2 in Microsoft Fabric

Pipelines are cool in Microsoft Fabric, but how could we use Dataflows to get data into our Data Warehouse? Patrick shows another way to move your data with jus

Pipelines can transform the way you tackle data needs, but they are not the only option. Dataflows in Microsoft Fabric offer an easy and efficient way to move your data into your Data Warehouse: connect to the relevant data sources, prepare and transform the data, and then land it directly in your Lakehouse or use a data pipeline to reach other destinations.

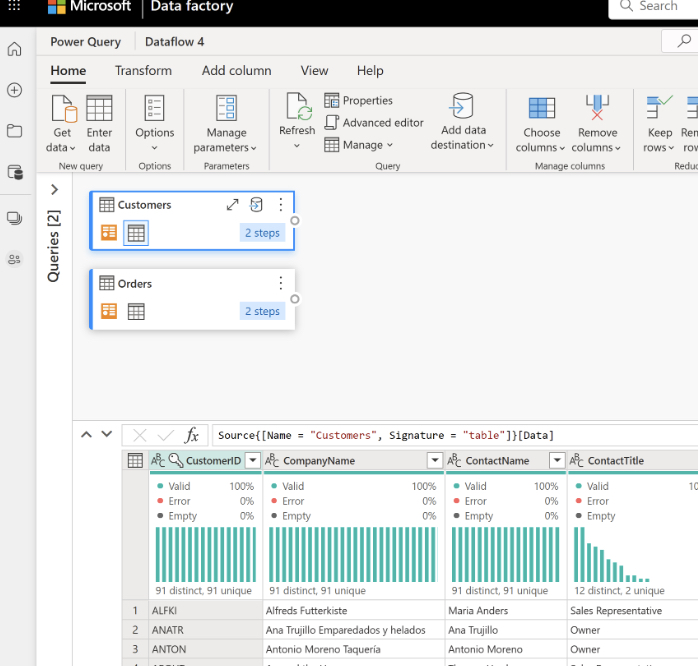

But what is a dataflow? It's essentially a cloud-based ETL (Extract, Transform, Load) tool for building and executing scalable data transformation processes. Using Power Query Online, you can extract data from a wide range of sources, transform it, and load it into a destination. The fundamental role of a dataflow is to reduce data prep time: once prepared, the data can be loaded into a new table, included in a Data Pipeline, or used as a data source by data analysts.
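Dataflows Gen2 are authored visually in Power Query Online, not in Python, so the following is only a conceptual sketch of the extract, transform, load sequence described above. The source rows, step functions, and the `lakehouse` dictionary standing in for a destination are all hypothetical; the ordered `STEPS` list loosely mirrors the way Power Query records applied steps.

```python
from typing import Callable

Row = dict[str, object]
Step = Callable[[list[Row]], list[Row]]


# Extract: hypothetical rows standing in for a connected data source.
def extract() -> list[Row]:
    return [
        {"region": " north ", "sales": "120"},
        {"region": "south", "sales": None},
        {"region": "North", "sales": "80"},
    ]


# Transform steps, applied in order (loosely like Power Query applied steps).
def drop_missing_sales(rows: list[Row]) -> list[Row]:
    return [r for r in rows if r["sales"] is not None]


def clean_region(rows: list[Row]) -> list[Row]:
    return [{**r, "region": str(r["region"]).strip().title()} for r in rows]


def cast_sales(rows: list[Row]) -> list[Row]:
    return [{**r, "sales": int(str(r["sales"]))} for r in rows]


STEPS: list[Step] = [drop_missing_sales, clean_region, cast_sales]


def run_etl(destination: dict[str, list[Row]], table: str) -> list[Row]:
    rows = extract()
    for step in STEPS:
        rows = step(rows)
    destination[table] = rows  # Load: write the shaped rows to a destination table
    return rows


lakehouse: dict[str, list[Row]] = {}  # hypothetical stand-in for a Lakehouse
result = run_etl(lakehouse, "sales_clean")
print(result)  # [{'region': 'North', 'sales': 120}, {'region': 'North', 'sales': 80}]
```

The point of the sketch is the ordering: data is shaped before it is loaded, which is what distinguishes ETL from the ELT pattern discussed later.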

- Traditionally, data engineers spend considerable time extracting, transforming, and loading data into a usable format for downstream analytics. However, Dataflows Gen2 offers an easy, reusable ETL process using Power Query Online.

- Instead of writing this logic in your preferred coding language, you can create a Dataflow Gen2 to extract and transform the data and then load it into a Lakehouse or other destinations.

- Dataflows preserve all transformation steps. To perform other tasks or load data to a different destination after the transformation, you can add the Dataflow Gen2 activity to a Data Pipeline.

- Dataflows can also connect to Lakehouse data to cleanse and transform it. This is particularly useful when you combine a Data Pipeline and Dataflow Gen2 in an ELT (Extract, Load, Transform) process.

- Dataflows can be partitioned horizontally: once a shared dataflow is in place, data analysts can build specialized datasets for specific needs on top of it. Because those datasets reuse the dataflow instead of querying the source directly, fewer connections to your data source are needed.

- They offer a wide range of transformations and can be run manually, on a refresh schedule, or as part of a Data Pipeline orchestration.

- While Dataflows Gen2 offers numerous benefits, it is not a replacement for a data warehouse. Dataflows do not support row-level security, and they require a workspace on a Fabric capacity.
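To make the horizontal-partitioning idea concrete, here is a hypothetical Python analogy (not a Fabric API): a shared "global" table is read from the source once, and two specialized outputs are derived from that table rather than from the source. The `source_connections` counter is an illustrative assumption used only to show that the source is queried a single time.

```python
from collections import defaultdict

source_connections = 0  # counts how often the hypothetical source is queried


def query_source() -> list[dict]:
    global source_connections
    source_connections += 1
    return [
        {"region": "North", "product": "A", "sales": 120},
        {"region": "South", "product": "B", "sales": 75},
        {"region": "North", "product": "B", "sales": 80},
    ]


# "Global" dataflow output: the source is queried once and the result is shared.
global_table = query_source()


# Specialized outputs are built on the shared table, not on the source itself.
def sales_by_region(rows: list[dict]) -> dict[str, int]:
    totals: defaultdict[str, int] = defaultdict(int)
    for r in rows:
        totals[r["region"]] += r["sales"]
    return dict(totals)


def north_only(rows: list[dict]) -> list[dict]:
    return [r for r in rows if r["region"] == "North"]


finance_view = sales_by_region(global_table)
north_view = north_only(global_table)
print(finance_view, len(north_view), source_connections)  # {'North': 200, 'South': 75} 2 1
```

Both views exist, yet the source was touched only once; that reuse is the benefit the bullet describes.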

Exploring The Full Potential of Dataflows Gen2

Dataflows Gen2 changes the way you handle data. As a cloud-based ETL tool, it helps standardize data, reduces data prep time, and promotes reusable ETL logic, all while offering a wide variety of transformations. Because dataflows can be horizontally partitioned, a single shared dataflow can feed specialized datasets built for specific needs. Although it is not a replacement for a data warehouse, Dataflows Gen2 has a lot of potential for handling complex data preparation tasks.

Learn about Landing data with Dataflows Gen2 in Microsoft Fabric

This article details how to use Dataflows Gen2 in Microsoft Fabric to get data into a data warehouse. Dataflows Gen2 connects to various data sources, prepares and transforms the data, and then either lands it directly in your Lakehouse or hands it to a data pipeline for other destinations. Dataflows are a type of ETL (Extract, Transform, Load) tool for executing scalable data transformation processes: they extract data from different sources, transform it through a variety of operations, and load it into a destination.

Power Query Online provides a visual interface for these tasks. A dataflow captures all the transformations needed to prepare the data, which reduces data prep time; the result can be loaded into a new table, included in a data pipeline, or used as a data source by data analysts. The objective of Dataflows Gen2 is to offer a simple, reusable way to perform ETL tasks with Power Query Online.

In practice, instead of writing code in your preferred language, you can create a Dataflow Gen2 to extract and transform the data first, then load it into a Lakehouse or other destinations. The dataflow preserves all transformation steps, and adding a data destination to your dataflow is optional.
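The two properties in this paragraph, saved transformation steps and an optional destination, can be modeled in a few lines. This is a hypothetical Python sketch, not how Fabric stores a dataflow: the `Dataflow` class, its `refresh` method, and the dictionary used as a Lakehouse are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Rows = list[dict]


@dataclass
class Dataflow:
    """Hypothetical model: a source, ordered saved steps, and an optional destination."""
    source: Callable[[], Rows]
    steps: list[Callable[[Rows], Rows]] = field(default_factory=list)
    destination: Optional[dict] = None  # None means no data destination is configured
    table: str = "output"

    def refresh(self) -> Rows:
        rows = self.source()
        for step in self.steps:  # the saved steps are replayed on every refresh
            rows = step(rows)
        if self.destination is not None:
            self.destination[self.table] = rows
        return rows


source_data = [{"qty": 1}, {"qty": -2}, {"qty": 5}]


def keep_positive(rows: Rows) -> Rows:
    return [r for r in rows if r["qty"] > 0]


# Without a destination, a refresh only produces the transformed rows.
no_dest = Dataflow(source=lambda: list(source_data), steps=[keep_positive])
print(no_dest.refresh())  # [{'qty': 1}, {'qty': 5}]

# With a destination, the same saved steps also write to a simulated Lakehouse table.
lakehouse: dict = {}
with_dest = Dataflow(source=lambda: list(source_data), steps=[keep_positive],
                     destination=lakehouse, table="orders")
with_dest.refresh()

# Because the steps are preserved, a later refresh picks up new source rows.
source_data.append({"qty": 7})
with_dest.refresh()
print(lakehouse["orders"])  # [{'qty': 1}, {'qty': 5}, {'qty': 7}]
```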

To perform other tasks or load data to a different location after a transformation, you can create a data pipeline and include the Dataflow Gen2 activity in its orchestration. Alternatively, you can combine a data pipeline and Dataflow Gen2 in an ELT (Extract, Load, Transform) process: a pipeline first extracts the data and loads it into your preferred destination (such as the Lakehouse), and a Dataflow Gen2 then connects to the Lakehouse data to cleanse and transform it.
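The ELT ordering can be sketched as a tiny sequential orchestration. This is a hypothetical Python model rather than Fabric's pipeline engine: `copy_activity` stands in for a pipeline step that lands raw data unchanged, `dataflow_activity` stands in for a Dataflow Gen2 that reads the landed Lakehouse data and cleanses it, and `run_pipeline` simply runs activities in order.

```python
from typing import Callable

lakehouse: dict[str, list[dict]] = {}  # hypothetical stand-in for a Lakehouse
run_log: list[str] = []


def copy_activity() -> None:
    """Extract + Load: land the raw rows unchanged (hypothetical source data)."""
    lakehouse["raw_orders"] = [
        {"id": 1, "amount": "10.5"},
        {"id": 2, "amount": ""},
        {"id": 3, "amount": "4"},
    ]
    run_log.append("copy")


def dataflow_activity() -> None:
    """Transform: read the landed Lakehouse data, cleanse it, write a curated table."""
    raw = lakehouse["raw_orders"]
    lakehouse["orders_clean"] = [
        {"id": r["id"], "amount": float(r["amount"])} for r in raw if r["amount"]
    ]
    run_log.append("dataflow")


def run_pipeline(activities: list[Callable[[], None]]) -> None:
    # In this sketch activities run strictly in sequence; an exception stops the rest.
    for activity in activities:
        activity()


run_pipeline([copy_activity, dataflow_activity])
print(run_log, lakehouse["orders_clean"])
# ['copy', 'dataflow'] [{'id': 1, 'amount': 10.5}, {'id': 3, 'amount': 4.0}]
```

The raw table stays in the Lakehouse alongside the curated one, which is a typical reason to choose ELT: the landed data can be re-transformed later without going back to the source.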

